The ENVI Deep Learning 3.0 release includes changes to the Pixel Segmentation workflows that save time and improve results. This topic describes those changes and explains how to convert ENVI Deep Learning API code and ENVI Modeler models created in version 2.1 or earlier to ENVI Deep Learning 3.0.

At a high level, several Pixel Segmentation training parameters were removed and replaced by new, simplified controls for training a model. API tasks were renamed to match their display names in the user interface, which avoids confusion when using the tasks in the ENVI Modeler. The InitializeENVINet5MultiModel task and the Initialize Pixel Segmentation Model dialog, which were previously required when training a model from scratch, were also removed.

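See the following sections for details:

- ENVI Modeler Models - Pixel Training
- ENVI Deep Learning Task - API
- ENVI Deep Learning Task - Dialogs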

ENVI Modeler Models - Pixel Training

This section provides a simple use case for training a deep learning model and describes how to migrate version 2.1 nodes to version 3.0.

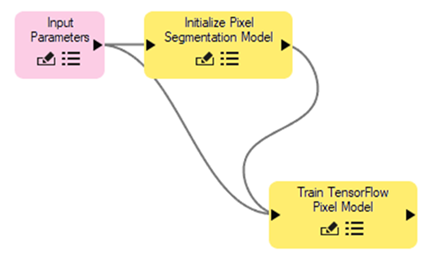

The example below uses the following nodes from 2.1: Input Parameters, Initialize Pixel Segmentation Model, and Train TensorFlow Pixel Model.

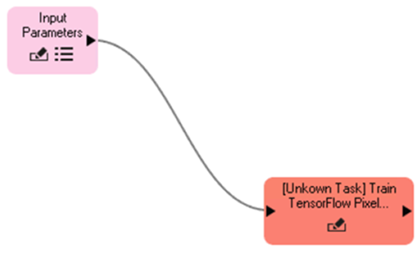

ENVI Deep Learning 2.1:

ENVI Deep Learning 3.0:

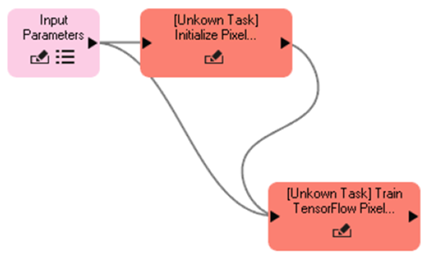

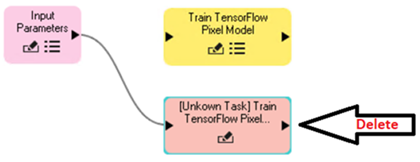

Opening the same model in ENVI Deep Learning 3.0 produces unknown task errors, as shown in the image below.

Use the steps that follow to port your ENVI Modeler model to ENVI Deep Learning 3.0.

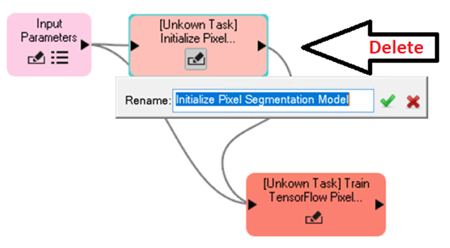

Step 1

Remove the obsolete Initialize Pixel Segmentation Model node. With your model file open, select the red node labeled [Unknown Task] Initialize Pixel and press the Delete key.

In the simple example below, the Initialize Pixel Segmentation Model node is removed and the only remaining unknown task node is Train TensorFlow Pixel Model.

Step 2

Add a new node for the updated task.

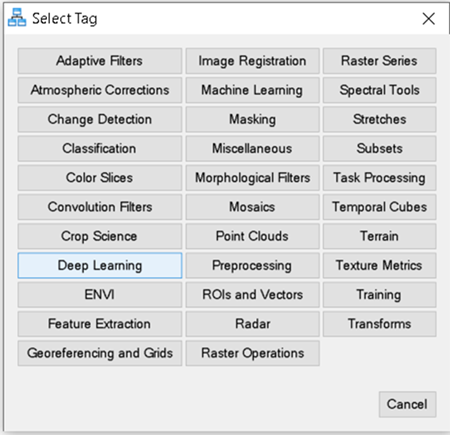

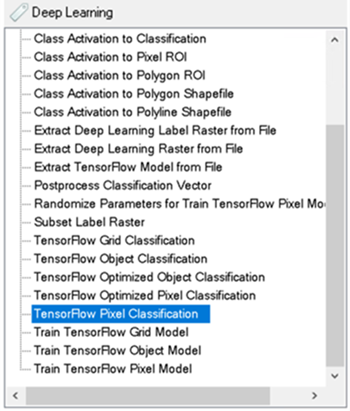

In the ENVI Modeler Search field under the Tasks section, enter Train TensorFlow Pixel Model, then select it from the list.

You can also search by tag by clicking the Filter by Tag button (shown next to the arrow in the image above). The Select Tag dialog appears. Click the Deep Learning tag to populate the list with the available Deep Learning tasks.

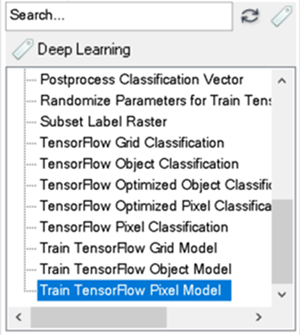

In the ENVI Modeler search list, scroll to the bottom and double-click Train TensorFlow Pixel Model.

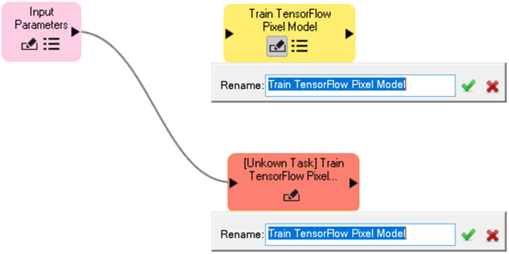

The new Train TensorFlow Pixel Model node is added to the modeler in yellow. Select the Edit (pencil) icon in the bottom-left corner of the node. The display names of the old task (red) and the new task (yellow) are identical. The old task was named Train TensorFlow Mask Model. In 3.0 it was renamed Train TensorFlow Pixel Model to match its display name, which eliminates confusion between API task names and ENVI Modeler display names going forward.

Step 3

Remove the obsolete Train TensorFlow Mask Model node. Before you delete it, note which nodes in your model connect to its input and output. If you have a complex workflow, review and record the node parameters in an older version of ENVI Deep Learning before making any changes. Deleting the old node removes all connections to its input and output parameters, so you will need to reconnect the new node's input and output the same way the old node was connected.

Select the red node labeled [Unknown Task] Train TensorFlow Pixel and press the Delete key.

Step 4

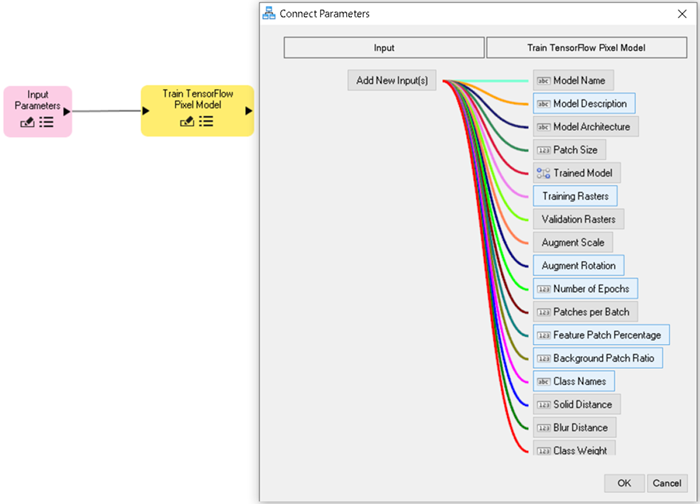

Connect the new Train TensorFlow Pixel Model node input parameters. For the simple example used here, all parameters will be connected as new inputs.

Drag the Input Parameters output arrow (on the right side of the node) onto the input arrow on the left side of the Train TensorFlow Pixel Model node. The Connect Parameters dialog appears, with buttons for connecting new inputs.

To add new connections, click the buttons in the Train TensorFlow Pixel Model column.

In 3.0, some parameters were removed and new ones were added. For context, the removed Initialize TensorFlow Model and Train TensorFlow Mask Model tasks were combined into a single new task, Train TensorFlow Pixel Model. The table below summarizes the changes: Obsolete parameters no longer exist, Migrated parameters carry over to Train TensorFlow Pixel Model, and New parameters were introduced in 3.0. See Train TensorFlow Pixel Model Task for full parameter details.

| Parameter Name | Parameter Origin | Status |
| --- | --- | --- |
| Number of Bands | Initialize TensorFlow Model | Obsolete |
| Number of Classes | Initialize TensorFlow Model | Obsolete |
| Patches per Epoch | Train TensorFlow Mask Model | Obsolete |
| Patch Sampling Rate | Train TensorFlow Mask Model | Obsolete |
| Input Model | Train TensorFlow Mask Model | Obsolete |
| Model Name | Initialize TensorFlow Model | Migrated |
| Model Description | Initialize TensorFlow Model | Migrated |
| Model Architecture | Initialize TensorFlow Model | Migrated |
| Patch Size | Initialize TensorFlow Model | Migrated |
| Training Rasters | Train TensorFlow Mask Model | Migrated |
| Validation Rasters | Train TensorFlow Mask Model | Migrated |
| Augment Scale | Train TensorFlow Mask Model | Migrated |
| Augment Rotation | Train TensorFlow Mask Model | Migrated |
| Number of Epochs | Train TensorFlow Mask Model | Migrated |
| Patches per Batch | Train TensorFlow Mask Model | Migrated |
| Class Name | Train TensorFlow Mask Model | Migrated |
| Solid Distance | Train TensorFlow Mask Model | Migrated |
| Blur Distance | Train TensorFlow Mask Model | Migrated |
| Class Weight | Train TensorFlow Mask Model | Migrated |
| Loss Weight | Train TensorFlow Mask Model | Migrated |
| Feature Patch Percentage | Train TensorFlow Pixel Model | New |
| Background Patch Ratio | Train TensorFlow Pixel Model | New |
| Trained Model | Train TensorFlow Pixel Model | New |
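The parameter table can also be expressed as data, which helps you track which connections to recreate when you rebuild a node. The following Python sketch is illustrative only. The `PARAMETER_STATUS` dictionary and the `plan_reconnection` and `new_parameters` helpers are hypothetical and are not part of ENVI or the ENVI Modeler. The sketch takes the connections you recorded on the old 2.1 nodes and separates them into connections to recreate on Train TensorFlow Pixel Model and connections to drop.

```python
from __future__ import annotations

# Status of each parameter display name, copied from the 2.1 -> 3.0 table.
PARAMETER_STATUS = {
    "Number of Bands": "Obsolete",
    "Number of Classes": "Obsolete",
    "Patches per Epoch": "Obsolete",
    "Patch Sampling Rate": "Obsolete",
    "Input Model": "Obsolete",
    "Model Name": "Migrated",
    "Model Description": "Migrated",
    "Model Architecture": "Migrated",
    "Patch Size": "Migrated",
    "Training Rasters": "Migrated",
    "Validation Rasters": "Migrated",
    "Augment Scale": "Migrated",
    "Augment Rotation": "Migrated",
    "Number of Epochs": "Migrated",
    "Patches per Batch": "Migrated",
    "Class Name": "Migrated",
    "Solid Distance": "Migrated",
    "Blur Distance": "Migrated",
    "Class Weight": "Migrated",
    "Loss Weight": "Migrated",
    "Feature Patch Percentage": "New",
    "Background Patch Ratio": "New",
    "Trained Model": "New",
}


def plan_reconnection(old_connections: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Split recorded 2.1 connections into (reconnect on the new node, drop).

    old_connections maps a parameter display name on the old node(s) to the
    upstream node it was connected to, as you noted before deleting it.
    """
    reconnect: dict[str, str] = {}
    drop: list[str] = []
    for param, source in old_connections.items():
        status = PARAMETER_STATUS.get(param)
        if status == "Migrated":
            reconnect[param] = source
        elif status == "Obsolete":
            drop.append(param)
        else:
            # Unknown names and 3.0-only parameters cannot come from a 2.1 node.
            raise ValueError(f"{param!r} is not a 2.1 parameter in the table")
    return reconnect, drop


def new_parameters() -> list[str]:
    """Parameters introduced in 3.0 that you may want to review and set."""
    return [p for p, s in PARAMETER_STATUS.items() if s == "New"]


if __name__ == "__main__":
    recorded = {
        "Training Rasters": "Input Parameters",
        "Number of Bands": "Input Parameters",
        "Patch Size": "Input Parameters",
    }
    reconnect, drop = plan_reconnection(recorded)
    print("reconnect:", reconnect)
    print("drop:", drop)
    print("review new parameters:", new_parameters())
```

Raising an error on unrecognized names is deliberate. A typo in your notes then fails loudly instead of silently losing a connection.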

Step 5

Connect output parameters from task node Train TensorFlow Pixel Model.

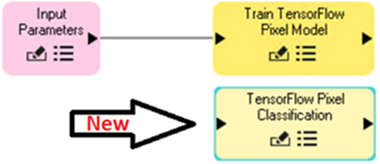

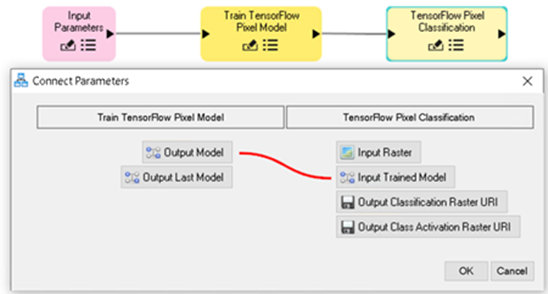

Depending on your workflow, the output model parameters may feed a classification node or an output node. In either case, you should be able to connect the output of the new training node to whatever the old node was connected to. The example below connects it to the TensorFlow Pixel Classification task node.

In the Tasks search list of Deep Learning tasks, double-click TensorFlow Pixel Classification.

A new TensorFlow Pixel Classification node appears in the ENVI Modeler. The node is not connected by default.

Connect the nodes by dragging the output arrow on the right side of the Train TensorFlow Pixel Model node onto the input arrow on the left side of the TensorFlow Pixel Classification node. The Connect Parameters dialog appears, showing connection options for Output Model and Output Last Model. This example uses Output Model, which represents the best model based on validation loss during training. Click Output Model on the left side, under the Train TensorFlow Pixel Model column. By default, it connects automatically to Input Trained Model under the TensorFlow Pixel Classification column, so the trained model becomes the input model for classification. After making the selection and updating any other parameters your workflow needs, click OK to complete the connections.
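A short Python sketch shows how the two outputs can differ. It is a conceptual model, not ENVI's implementation, and it rests on three assumptions. It records one validation loss value per epoch. It treats Output Last Model as the model from the final epoch, which is inferred from the parameter name. It resolves ties in favor of the earliest epoch. The `select_models` function is a hypothetical name.

```python
from __future__ import annotations


def select_models(validation_losses: list[float]) -> tuple[int, int]:
    """Return (best_epoch, last_epoch), numbered from 1.

    best_epoch follows the idea described for Output Model (lowest validation
    loss); last_epoch follows Output Last Model (assumed: the final epoch).
    """
    if not validation_losses:
        raise ValueError("need validation loss for at least one epoch")
    # min() returns the first minimal index, so ties resolve to the earliest epoch.
    best_index = min(range(len(validation_losses)), key=validation_losses.__getitem__)
    return best_index + 1, len(validation_losses)


if __name__ == "__main__":
    # Made-up losses: the model improves until epoch 3, then starts to overfit.
    losses = [0.82, 0.61, 0.55, 0.58, 0.63]
    best, last = select_models(losses)
    print(f"Output Model ~ epoch {best}, Output Last Model ~ epoch {last}")
```

When validation loss rises in the later epochs, as in the sample values, the two outputs come from different epochs. Choosing Output Model avoids passing a model that has started to overfit to classification.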

For simplicity, this guide does not cover every possible connection. There are many ways to build a workflow, and describing them all is beyond the scope of this guide.

ENVI Deep Learning Task - API

This section covers a basic workflow that uses the ENVI Deep Learning task API to train a model. It walks through example code written for ENVI Deep Learning 2.1 and shows the steps required to migrate it to ENVI Deep Learning 3.0.
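Before editing by hand, it can help to find every call to a removed or renamed task in your 2.1 scripts. The following Python sketch is a hypothetical helper, not part of ENVI. It searches IDL source text for `ENVITask('...')` calls that name InitializeENVINet5MultiModel (removed) or TrainTensorFlowMaskModel (replaced by TrainTensorFlowPixelModel). It is based only on the task changes described in this topic. `TASK_CHANGES` and `find_obsolete_tasks` are illustrative names.

```python
from __future__ import annotations

import re

# Task changes described in this topic (ENVI Deep Learning 2.1 -> 3.0).
# None means the task was removed; its parameters moved to the training task.
TASK_CHANGES = {
    "InitializeENVINet5MultiModel": None,
    "TrainTensorFlowMaskModel": "TrainTensorFlowPixelModel",
}

# Captures the task name in ENVITask('Name') or ENVITask("Name").
_TASK_CALL = re.compile(r"""ENVITask\(\s*['"]([A-Za-z0-9_]+)['"]""", re.IGNORECASE)


def find_obsolete_tasks(idl_source: str) -> list[tuple[int, str, str | None]]:
    """Return (line_number, old_task, replacement_or_None) for each obsolete call."""
    # Compare names case-insensitively, since scripts may vary in capitalization.
    lookup = {name.lower(): (name, new) for name, new in TASK_CHANGES.items()}
    findings = []
    for lineno, line in enumerate(idl_source.splitlines(), start=1):
        for match in _TASK_CALL.finditer(line):
            hit = lookup.get(match.group(1).lower())
            if hit is not None:
                findings.append((lineno, hit[0], hit[1]))
    return findings


if __name__ == "__main__":
    legacy_code = (
        "initTask = ENVITask('InitializeENVINet5MultiModel')\n"
        "trainTask = ENVITask('TrainTensorFlowMaskModel')\n"
        "labelTask = ENVITask('BuildLabelRasterFromROI')\n"
    )
    for lineno, old, new in find_obsolete_tasks(legacy_code):
        print(f"line {lineno}: {old} -> {new or 'remove (no replacement task)'}")
```

The helper does not report BuildLabelRasterFromROI, which matches the data preparation step: that code does not need to change.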

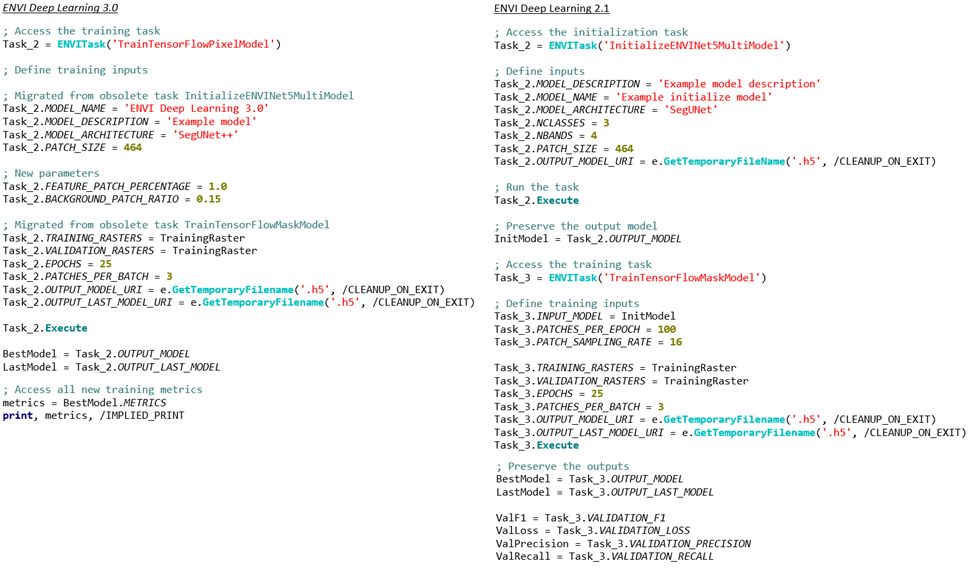

The image below shows a quick side-by-side comparison of the task API, with ENVI Deep Learning 3.0 on the left and 2.1 on the right.

The example code is intended only to explain the migration process, not to generate useful output. It uses data files included with the ENVI installation as training data. The data files used are:

C:\Program Files\NV5\ENVI60\data\qb_boulder_msi

C:\Program Files\NV5\ENVI60\data\qb_boulder_roi.xml

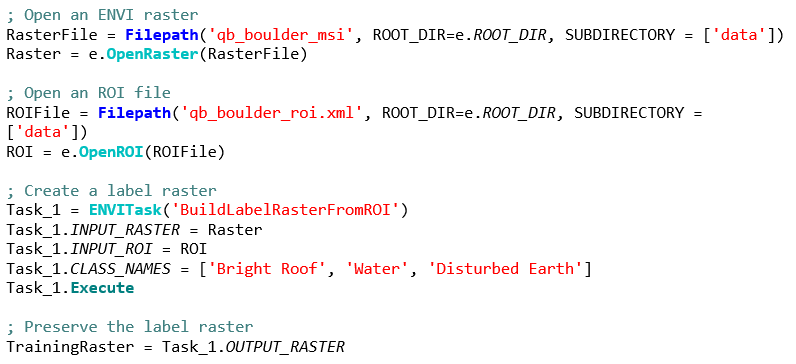

Step 1. (Data Preparation)

The process for creating ENVI Deep Learning labeled data has not changed from 2.1 to 3.0 and can be applied the same way for both versions.

Using the data files qb_boulder_msi and qb_boulder_roi.xml, run the BuildLabelRasterFromROI task. The example below generates a single label raster suitable for training a pixel segmentation model. One label raster is not enough data to produce a usable model, so this raster is for demonstration purposes only.

Existing data-creation code for ENVI Deep Learning does not need to change. This is only the first step in the training process.

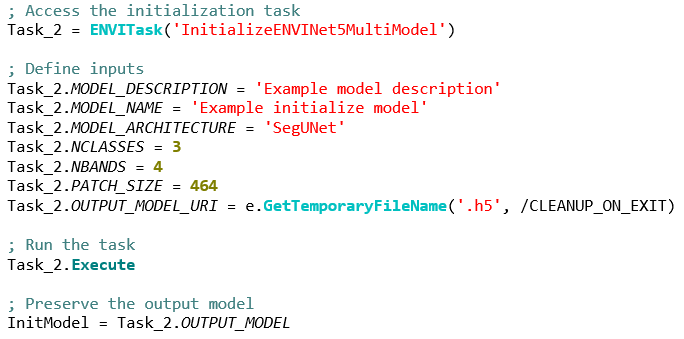

Step 2. (Obsolete: Model Initialization)

In ENVI Deep Learning 2.1 and earlier, you had to create an initial model before training. The InitializeENVINet5MultiModel task is no longer valid as of ENVI Deep Learning 3.0. In its place, four initialization parameters were integrated into the new training task, TrainTensorFlowPixelModel.

The code snippet shown below is no longer required or usable in ENVI Deep Learning 3.0, so you can remove it from any API code you have written. When migrating code to the latest version of ENVI Deep Learning, note the values of the following parameters, which now exist on the 3.0 training task. You may want to keep these values and set them on the training task in Step 3.

- MODEL_ARCHITECTURE
- MODEL_DESCRIPTION
- MODEL_NAME
- PATCH_SIZE
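When you remove the initialization code, you can record the four parameter values listed above so that you can reapply them on the training task. The following Python sketch shows that bookkeeping, assuming you have collected the 2.1 initialization settings in a dictionary keyed by IDL keyword. `carry_forward_init_params` is a hypothetical helper, not an ENVI function. The `NUMBER_OF_BANDS` key in the usage example is an assumed keyword name used only for illustration.

```python
from __future__ import annotations

# InitializeENVINet5MultiModel parameters that, per this topic, now exist on
# TrainTensorFlowPixelModel in ENVI Deep Learning 3.0.
CARRIED_FORWARD = ("MODEL_ARCHITECTURE", "MODEL_DESCRIPTION", "MODEL_NAME", "PATCH_SIZE")


def carry_forward_init_params(init_params: dict[str, object]) -> tuple[dict[str, object], list[str]]:
    """Split 2.1 initialization values into (kept for 3.0 training, discarded).

    Keys are compared in upper case because IDL keywords are case-insensitive.
    """
    kept = {k.upper(): v for k, v in init_params.items() if k.upper() in CARRIED_FORWARD}
    discarded = [k for k in init_params if k.upper() not in CARRIED_FORWARD]
    return kept, discarded


if __name__ == "__main__":
    # Example values only. NUMBER_OF_BANDS is an assumed keyword name standing in
    # for any other 2.1 initialization setting that has no 3.0 equivalent.
    old_init = {"MODEL_NAME": "Boulder demo", "PATCH_SIZE": 256, "NUMBER_OF_BANDS": 4}
    kept, discarded = carry_forward_init_params(old_init)
    print("set on TrainTensorFlowPixelModel:", kept)
    print("no longer needed:", discarded)
```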

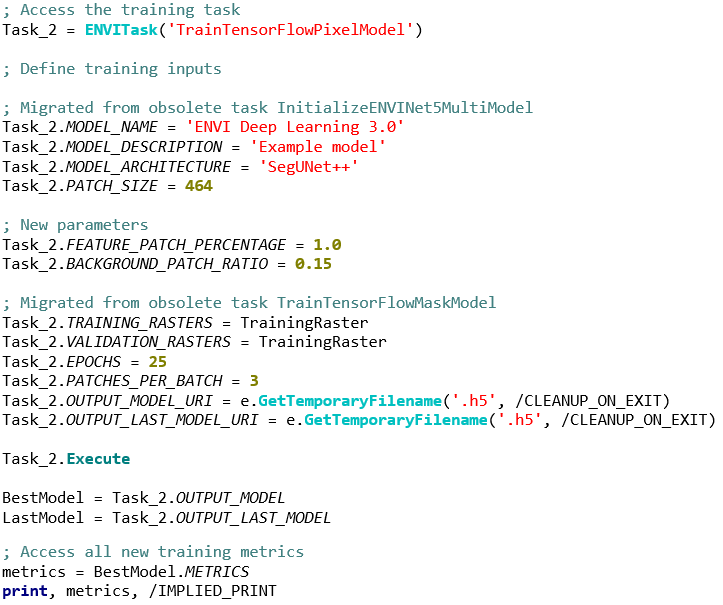

Step 3. (Training)

This section describes the major changes to the pixel segmentation training task between ENVI Deep Learning 2.1 and 3.0. The new TrainTensorFlowPixelModel task replaces the removed TrainTensorFlowMaskModel task. In addition, several parameters were removed and new ones were added.

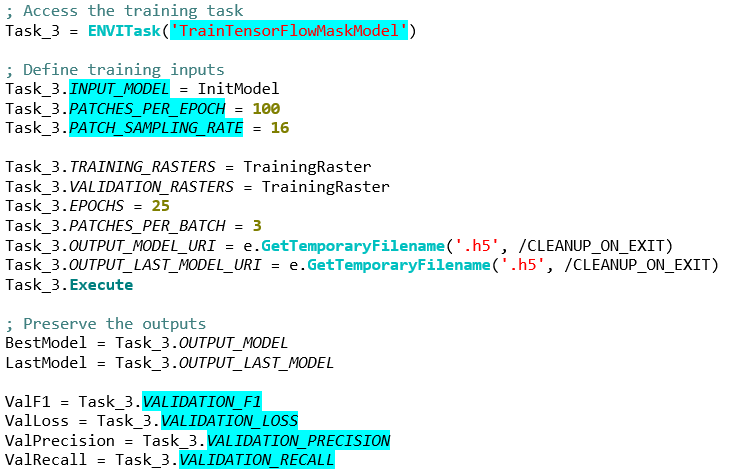

The code snippet below highlights, in cyan, the obsolete parameters that have been removed or renamed.

Removed Input Parameters:

- INPUT_MODEL
- PATCHES_PER_EPOCH
- PATCH_SAMPLING_RATE

Removed Output Parameters:

- VALIDATION_F1
- VALIDATION_LOSS
- VALIDATION_PRECISION
- VALIDATION_RECALL
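The removed parameters listed above affect migration in two ways. Removed inputs must be deleted from the training call. Code that reads a removed output must be rewritten. The following Python sketch flags both, assuming your 2.1 training inputs are collected in a dictionary keyed by IDL keyword and your script is available as text. `migrate_train_inputs` and `removed_outputs_in_use` are hypothetical helpers, not ENVI functions. The sketch does not handle renamed keywords, so check the Train TensorFlow Pixel Model Task reference for those.

```python
from __future__ import annotations

import re

# Keywords this topic lists as removed from the pixel training task in 3.0.
REMOVED_INPUTS = {"INPUT_MODEL", "PATCHES_PER_EPOCH", "PATCH_SAMPLING_RATE"}
REMOVED_OUTPUTS = {"VALIDATION_F1", "VALIDATION_LOSS", "VALIDATION_PRECISION", "VALIDATION_RECALL"}

# Matches property reads such as task.VALIDATION_LOSS (any capitalization).
_OUTPUT_READ = re.compile(
    r"\.\s*(" + "|".join(sorted(REMOVED_OUTPUTS)) + r")\b", re.IGNORECASE
)


def migrate_train_inputs(old_inputs: dict[str, object]) -> dict[str, object]:
    """Drop removed 2.1 input keywords; keep the rest under upper-case names."""
    return {k.upper(): v for k, v in old_inputs.items() if k.upper() not in REMOVED_INPUTS}


def removed_outputs_in_use(idl_source: str) -> list[str]:
    """Return the removed output keywords that existing IDL code still reads."""
    return sorted({name.upper() for name in _OUTPUT_READ.findall(idl_source)})


if __name__ == "__main__":
    # Example values only; "init_model" stands in for a 2.1 initialized model.
    old_inputs = {"INPUT_MODEL": "init_model", "PATCHES_PER_EPOCH": 200, "PATCH_SIZE": 256}
    print(migrate_train_inputs(old_inputs))
    legacy = "trainTask.Execute\nPRINT, trainTask.VALIDATION_LOSS\n"
    print(removed_outputs_in_use(legacy))
```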

ENVI Deep Learning 2.1:

ENVI Deep Learning 3.0:

ENVI Deep Learning Task - Dialogs

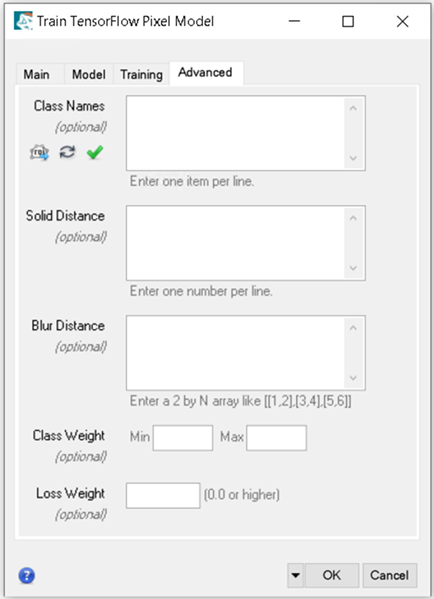

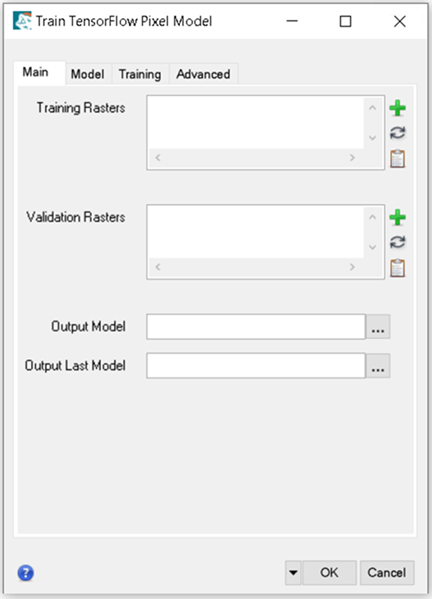

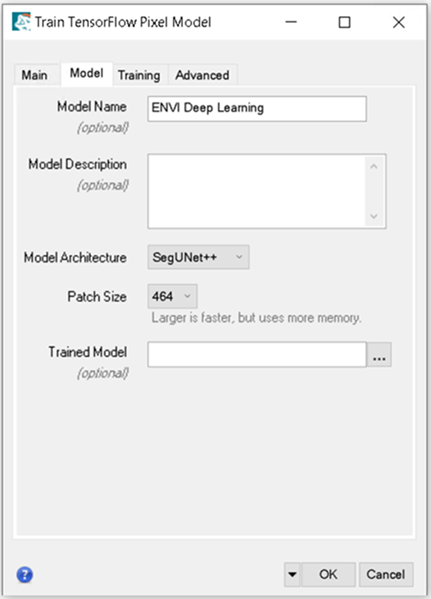

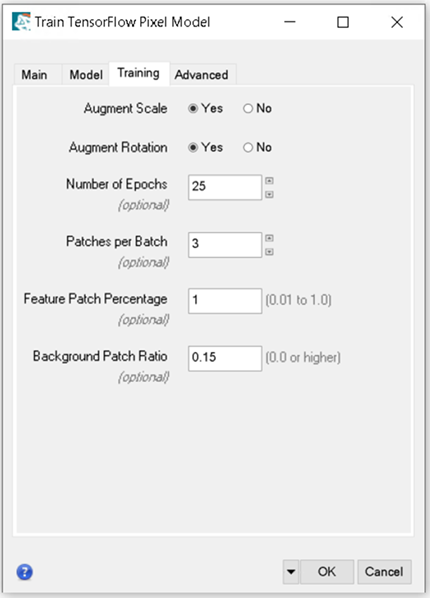

In ENVI Deep Learning 3.0, the training tasks use ENVI task tabs to organize their parameters. Train TensorFlow Pixel Model, Train TensorFlow Object Model, and Train TensorFlow Grid Model all use tabs. This section explains how the training task parameters are organized across the four tabs: Main, Model, Training, and Advanced.

The tabs of the training dialogs are shown below, using the Train TensorFlow Pixel Model dialog. For full details on the training parameters for each model type, see Train TensorFlow Grid Models, Train TensorFlow Object Models, and Train TensorFlow Pixel Models.

Main Tab

The Main tab contains the minimal set of parameters needed for most training, so you can often train without modifying any other parameters. The training and validation datasets are the minimum requirement for training a model with ENVI Deep Learning.

Model Tab

The Model tab has Model Name and Model Description parameters, plus additional settings that are specific to the type of model that will be created. For example, the Train TensorFlow Pixel Model and Train TensorFlow Grid Model offer different model architectures and sizes.

Training Tab

The Training tab contains tuning parameters that control how much foreground and background data is used, data augmentation, and the duration of training.

Advanced Tab

The Advanced tab contains parameters for data manipulation during the training process.